Today's content is an older post from 2019, but it is still valid in the debate about decision-making and its goals. The text is intended to show that the task of AI is not to water the flowers, but to support their healthy growth across the full spectrum of habitat needs.

Practically every week, a new experiment with deep learning and data corpus acquisition appears, one that analyzes, predicts, translates, and suggests… Coverage is becoming comprehensive. Thanks to falling technology prices and the open sharing of basic deep learning principles, more scientific groups are experimenting, and the commercial sphere is not lagging behind. Even a small company can set up a server that meets the requirements of machine learning and cultivate its data corpus on available samples. What is lacking today are machine learning experts and a selection of ready-made solutions: modules that we could incorporate immediately, saving time for other lines of research. Are we really at the very beginning?

To keep the example close to home: one cell of research involves deploying shared deep learning so that A.I. collaborates in the symptomatic management of a botanical greenhouse, and subsequently in handling xBiotopes, where the size of the biotope no longer matters.

The example has aphids at the beginning and, at the end, a data corpus that works together with processing and information retrieval modules, enabling further layers of learning that engage users on an educational basis.

I have been playing with this idea for a long time, and money for a server and people is still missing 🙂 Little things in the researcher’s world 🙂

That does not prevent me from describing the principle, which can be derived from what has already been achieved in the field of AI. It is not science fiction; the only thing missing in today's reality is available computing power…

Aphids are an excellent example of a natural phenomenon with a broad impact on learning. The data corpus acquired from them can be developed further to cover other parasites, to catalog symptoms on various plant species, and to link the acquired information with the data world of encyclopedias.

In the beginning, there was a photo… and it was an aphid

This part of the blog should be about teaching the system, but there are enough descriptions of deep learning available to understand the basic principle, so I will allow myself to stick with a layman's view.

Teaching a system, through machine learning on a data corpus, to see and recognize aphids is easy to imagine. All it takes is thousands of pre-prepared photos, several video sequences, and… I'm really simplifying, but still, teaching a computer to see aphids today is an easy matter.

If you doubt it, just look here, for example: https://developers.google.com/machine-learning/crash-course/ or at older texts here: https://medium.com/@ageitgey/machine-learning-is-fun-80ea3ec3c471.

Google's Machine Learning pages show how to think about preparing resources, how to avoid problems right at the beginning, and why fewer goals are better than more.

Make no mistake: in training, I would prefer to involve the whole garden, greenhouse, and aquarium, prepare any biotope for acceleration… and include the camera system, home control, photovoltaics… But even if I leave aside the hardware needed to shorten processing time, there are so many disparate inputs and outputs that the result cannot even be estimated.

It is necessary to start from scratch and design the Learning Direction Goals so that each one only extends the boundaries of what has already been learned. The stronger the connection with the data already acquired, the faster the development and the grasp of context.

Another way is the layering of skills. For example, a deep learning system trained on image data collected in a botanical garden can easily be extended with sensors at the input, which provide additional contextual data in a deeper layer of context. Artificial intelligence can thus observe the work of automation, semi-automation, and human intervention, and learn from it.

Conventional automation will soon be replaced, because the system will learn to control irrigation timers, lighting, air conditioning, and heating based on the available sensors. Then the roles will be reversed: the human being will supervise the work of the neural network using the same observation sensors.

Aphids as the basis for the neural system of symptomatic technology

I like aphids for tests about as much as geneticists like fruit flies. They are easy to keep, breed, and transfer to new biotopes. Aphids are slow, so it is easy to take extremely detailed photos of every stage of their life cycle: development from an individual to a colony, then the growth of the winged form, which flies a little further, discards its wings, and starts everything again in a new place. It is just as easy to observe the colonization of plants and the gradual occupation of rosettes and branches, all the way to aphid plains on leaves or pear aphids on flowers and young fruits.

This means comprehensive samples are easy to prepare: first for the initial differentiation of “leaf” and “aphid”, then “plant” and “aphid”, where we are already working with parts of plants and various details of the whole plant, and finally for targeted recognition of aphids even on the shaded underside of leaves, because the leaf curls in a way the system has learned to associate with aphid infestation. That pattern is learned by observing an unaffected biotope with a camera system and then deliberately inoculating it with aphids.
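The staging described here can be written down as a small curriculum in which each stage only adds labels to what came before. This is a minimal sketch: the stage names, the extra labels `plant_part` and `curled_leaf`, and the helper `new_labels` are my own assumptions for illustration, not part of any existing tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    labels: tuple[str, ...]


# Hypothetical staging of the label set; each stage keeps the earlier labels
# and extends them, so only the boundaries of learning are expanded.
CURRICULUM = (
    Stage("leaf_vs_aphid", ("leaf", "aphid")),
    Stage("plant_vs_aphid", ("leaf", "aphid", "plant", "plant_part")),
    Stage("hidden_infestation",
          ("leaf", "aphid", "plant", "plant_part", "curled_leaf")),
)


def new_labels(curriculum):
    """Return, per stage, the labels that no earlier stage has introduced."""
    seen = set()
    added = []
    for stage in curriculum:
        fresh = [label for label in stage.labels if label not in seen]
        seen.update(fresh)
        added.append((stage.name, fresh))
    return added


for name, fresh in new_labels(CURRICULUM):
    print(name, "adds", fresh)
```

Listing only the newly added labels per stage makes it easy to check that a later stage never drops something the system has already learned.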

Treatment is also easy, with many means available, including a wide range of organic solutions. The goal of the neural network is to protect plants, not just to recognize aphids, so examples of pest damage leading to plant destruction must largely be accompanied by examples of the protective agent being applied, followed by the healed plant as the goal.

Aphids are always accompanied by two other life forms that are so different that they cannot be mistaken for aphids, and both are covered by the existing curriculum. As a bonus, one life form collaborates with the plant, and the other with the aphid… Do you already know which ones?

Ladybug and Ant

Once artificial intelligence at today's level of deep learning can show us the first signs of a parasite and the unwanted colonization that follows and destroys the plant, and once it has been given the goal of “plant protection,” let's assume that such a system will alert us as soon as the optical systems detect aphids, even the very first one, whether it is flying or carried by an ant.

Ants and aphids really cannot be mistaken for each other, but at the beginning of training some of their pixel patterns would be confused. Still, it is important to observe the ants that tend aphids. A further bonus is the several species of ants within reach of the optical systems monitoring the plant environment.

Another direct companion is the seven-spot ladybird, whose ranks have been joined by the more aggressive Asian form, which is reportedly gradually replacing it.

A.I. thus has several varieties available and can “monitor which ladybirds” are present, which is a small bonus compared to observing ladybirds eliminating aphids. That moment of capture interests me greatly, because the neural network must evaluate the ladybird as a positive means of protective treatment. Moreover, it must protect both plants and ladybirds, and ultimately warn against applying a protective agent when a biological solution is reasonably possible. The ladybird, with its shape and wing cases, also feeds into other teaching and data development topics.
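The decision described here, treating the ladybird as a positive agent and warning against spraying when a biological solution looks reasonable, can be sketched as a simple rule over detected objects. The labels, the `PESTS_PER_ALLY` ratio, and the returned action names are assumptions made up for this illustration; a trained network would learn such a boundary rather than have it hard-coded.

```python
from collections import Counter

# Hypothetical labels and ratio, chosen only for illustration.
PEST = "aphid"
ALLY = "ladybird"
PESTS_PER_ALLY = 10  # assumed: one ladybird can keep up with ten aphids


def recommend(detections):
    """Suggest an action from a list of detected object labels."""
    counts = Counter(detections)
    pests, allies = counts[PEST], counts[ALLY]
    if pests == 0:
        return "no_action"
    if allies * PESTS_PER_ALLY >= pests:
        # Ladybirds are present in sufficient numbers: protect them,
        # and warn against applying a protective agent.
        return "monitor_bio_control"
    return "suggest_treatment"


print(recommend(["aphid"] * 20 + ["ladybird"] * 2 + ["ant"]))
print(recommend(["aphid"] * 20 + ["ladybird"]))
```

Under these assumed numbers, the first call favors leaving the ladybirds to work and the second suggests treatment, because one ladybird is not considered enough for twenty aphids.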

Another helper found in open nature is the rove beetle, whose shape and behavior again extend the distinguishing layer for insects. The spectrum of information can be supplemented, for example, by replacing the flying form of aphids with the dark-winged fungus gnat, which also damages plants significantly and whose initial stage is a larva in the substrate.

At this stage, we could have a neural network capable of locating basic signs of insects on plants and studying them, with the aim of distinguishing insect species by their behavior on different plants. Another related branch, which is currently weaker, is teaching the corpus about plants.

The initial dataset contains only basic plant parameters and appearances. It is likely that the neural system will discover the difference between the leaves of a beefsteak tomato and the robust leaves of a hairy pepper. It will also discover relationships between a plant's structure and the pattern of infestation, thus dividing plants into those that are a priority for monitoring and those that are only checked.

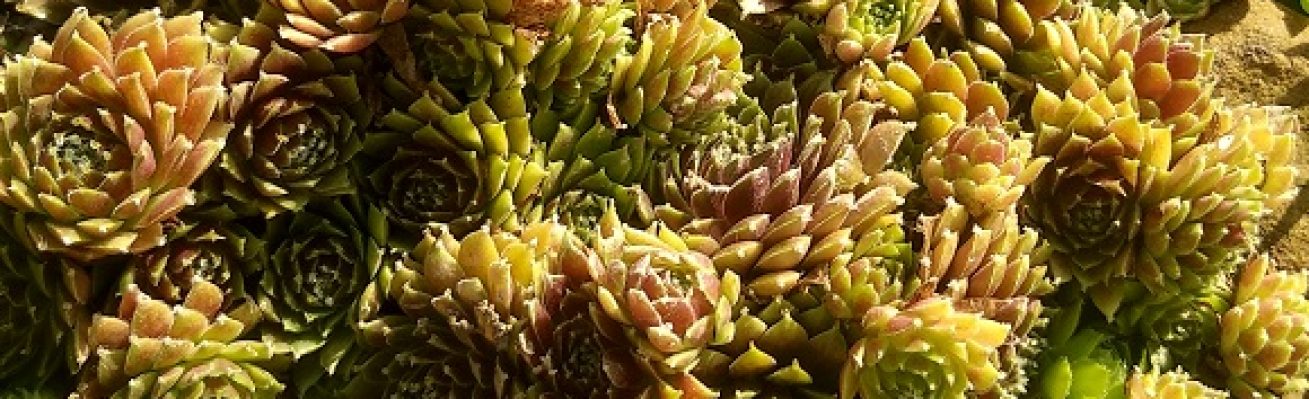

Thus, the neural system is expected to learn on its own that if a strawberry or tomato plant is within reach, there will be no aphids on cacti, and that input data from succulent biotopes will point to findings other than aphids. There, other groups of parasites will turn up, which the system should already learn to localize.

So far, however, there has been no connection of symbols; artificial intelligence has learned to recognize insects and plants, with the bonus of mold manifestations, but that’s all. It found, recognized, and identified “objects,” mapped the context, and according to the goal, alerted to harmful objects. It also monitors other manifestations and learns. Connecting objects with concepts and information about them for teaching is another goal, and let’s move on to the next step…

Environmental control sensors

As I mentioned earlier, the goal is symptomatic biotope management, which includes not only optical monitoring but also environmental system control involving work with sensors and control elements.

If you wonder why I didn't start with this simpler process, for now accept the idea that it is really easy to teach a neural system to control lighting, irrigation, temperature… but at the beginning, it's just a matter of working with switches. There's no thought in it. If the neural network only monitors the control of the environment, it will only learn to control the environment.

Control of the environment is thus only one layer in the symptomatic exploration of plant health. Thanks to sensors, the neural network observes the timers and machines, adopts their basic control scheme as a pattern to learn from, and adds further context.
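Observing timers and machines through sensors amounts to collecting pairs of "what the sensors saw" and "what the automation did". The sketch below shows one way such pairs could be built from a log. The `Reading` and `TimerEvent` records, their fields, and the `to_training_pairs` helper are hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    t: int                # minutes since the start of the log
    soil_moisture: float  # fraction between 0.0 and 1.0
    light: float          # arbitrary sensor units


@dataclass(frozen=True)
class TimerEvent:
    t: int
    action: str           # e.g. "irrigation_on"


def to_training_pairs(readings, events, idle="no_action"):
    """Pair each sensor reading with the timer action logged at the same minute."""
    by_time = {event.t: event.action for event in events}
    return [((r.soil_moisture, r.light), by_time.get(r.t, idle))
            for r in readings]


log = [Reading(0, 0.35, 120.0), Reading(60, 0.22, 480.0)]
timers = [TimerEvent(60, "irrigation_on")]
for features, action in to_training_pairs(log, timers):
    print(features, "->", action)
```

Pairs like these are the "given pattern" the network can imitate at first; the optical symptoms then supply the additional context that a plain timer never had.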

Drought is also a symptom of plant damage, one unrelated to pests or fungus, so the network will easily learn “dry condition” as a consequence of not watering and, conversely, fungus and wilting as consequences of overwatering. On top of sensors and control, it thus adds context “for the development” of plants, based on symptoms verified by the sensor system. This is important because extensive botanical exhibitions require zonal care rather than the blanket application of support used in small biotopes, just as in a small greenhouse there is a difference between drip and periodic irrigation.

The goal of this part is for Artificial Intelligence to learn to work with zonal environmental influence and, according to the symptoms, eventually be able to add irrigation where it is needed, in specific targeted areas. It also operates the other means of controlling the environment of enclosed biotopes. Or rather bounded biotopes, because in the same way as a greenhouse or a botanical garden across its whole range of exhibitions, such a system can manage public greenery and public lighting, collect information on local consumption, and plan switching regimes around the use of renewable energy sources. This is not even music of the future; it is just a matter of deploying a learning and testing environment off the screen for real demonstrations.
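Zonal care can be illustrated with a small rule: irrigate only the zones where a drought symptom seen by the cameras is confirmed by a soil sensor. The `Zone` record, the `DRY_BELOW` threshold, and the zone names are assumptions for this sketch, and the fixed rule stands in for what the network would be expected to learn.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    name: str
    soil_moisture: float    # fraction 0.0 to 1.0 from a hypothetical soil sensor
    drought_symptoms: bool  # whether the camera system reported drought symptoms


DRY_BELOW = 0.25  # assumed threshold, not a horticultural recommendation


def zones_to_irrigate(zones, dry_below=DRY_BELOW):
    """Return names of zones where the symptom is confirmed by the sensor."""
    return [z.name for z in zones
            if z.drought_symptoms and z.soil_moisture < dry_below]


greenhouse = [
    Zone("tomatoes", 0.18, True),
    Zone("peppers", 0.40, True),   # symptoms but moist soil: look for another cause
    Zone("cacti", 0.10, False),    # dry soil but no symptoms: nothing to fix
]
print(zones_to_irrigate(greenhouse))  # prints ['tomatoes']
```

Requiring both signals is the design choice here: the symptom alone might come from pests or overwatering, and dry soil alone is normal for some biotopes.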

Original text: https://mareyi.cz/neuralni-site-pri-rizeni-biotopu-a-prostredek-podpory-zdraveho-rustu-rostlin/